nDreams, a UK-based games studio producing the upcoming VR title The Assembly, are declaring war on the discomfort commonly associated with first-person VR games. We sat down with Senior Designer Jackie Tetley and Communications Manager George Kelion at Gamescom 2015 to hear more about the game’s new ‘VR comfort mode’.

Without a HUD or a cockpit to help obscure some of the vection-inducing motion that can turn even the strongest stomachs, first-person gameplay in VR is still pretty dicey for many. Traditional controls, although fine for those who have already developed what’s commonly called “VR legs,” aren’t for everyone, and can bring on the dreaded ‘hot sweats’ that precede sim-sickness.

“I’m happy to say we’re on the forefront of trying to tackle these problems head on. In three or four years’ time we’ll be able to look back and all of this will be a memory,” Kelion said.

![The Assembly - Screenshot - 06 - CH1]()

nDreams, developers of the upcoming multi-headset game The Assembly, are keenly aware that the quickly approaching consumer-level VR headsets will bring with them VR veterans and first-timers alike. In response, they’re integrating a number of different control schemes that they hope will let anyone play The Assembly comfortably.

The Assembly and VR Comfort Mode

The game begins as I’m being rolled through the heart of the Great Basin Desert in Nevada. I’m upright and strapped to a gurney Hannibal Lecter-style, a mechanic Kelion tells me will “cajole people into using their head as the camera.” Because locomotion is intentionally on rails for the first chapter, my only choice is to observe the scene around me.

We come to the mouth of The Assembly’s bunker, the underground home of a clandestine group of amoral scientists and researchers, the sort of people who ran the Japanese Unit 731 during WW2. A man and a woman talk openly about who I am, and how long they think I’ll last in the group. We roll closer and closer, eventually passing a dead crow lying face down in the sand. I’m scanned by two security cameras and wheeled past heavy blast doors into a service elevator, never once glimpsing my captors, who, judging from the dialogue, are fans of my medical work. Aha, so I’m a doctor. We descend several levels, passing open windows that show patients, each worse off than the last. Coming to a halt, we roll out of the elevator shaft and into a vast complex. They’ve already said too much, and they drug me once more.

![The Assembly - Screenshot 16 - CH1]()

For the next chapter, Senior Designer Jackie Tetley gave me a choice. Did I want to play traditional style, where the gamepad’s right stick controls yaw (a choice John Carmack calls “VR poison”), or did I want to give the new VR comfort mode a spin?

Choosing comfort mode, I was dropped back into the bunker, this time in a closed-off lab complete with emergency eyewash stations and all manner of medical storage. Here I could move around and test it out.

As opposed to traditional FPS gamepad control schemes, the right stick on the PS4 gamepad now acted as a ‘snap-to camera’, instantly rotating me 90 degrees to my left or right. Although this isn’t ideal for maintaining a continuous feel in the world, my stomach thanked me for the option to forgo the sickening turns that I know first-hand can put you off VR for the rest of the day.

In comfort mode, head tracking gently pulls my character left or right according to where I’m looking, much as you’d turn in real life. This is much more subtle, and it eventually faded into the background as I explored the space for any clue about how to get out, and who I was.

![The Assembly - GC Screen - 05]()

The last comfort mode mechanic was a teleport option that lets you pre-select the direction you want to face, so that you can jump anywhere and immediately look the way you need to. Tetley tells me that it still isn’t perfected: teleporting on top of boxes or other items can break the game, since the game doesn’t incorporate jumping. Personally, I can’t say I liked using it, because it (necessarily) brings up an icon, which in the current build is a floating metal ball studded with arrows. You select an arrow with the right stick and set the distance with the left. The floating icon was easy to use, and teleporting was by no means jarring, but the icon itself felt intrusive in the space. Thankfully, though, all of these control schemes are optional, and can be used or ignored according to individual preference.

Kelion and Tetley assured me that none of this is final, however, as they want to include a wider range of movement controls at launch to suit more play styles.

![The Assembly - Screenshot - 14 - CH3]()

But what about large tracking volumes that let you actually walk around the virtual space, à la HTC Vive and the new Oculus Rift? Isn’t that a sort of ‘VR comfort mode’ too? Kelion responded:

“The truth is when it comes to the HTC Vive, this is something we’ve been implementing for control pads for Oculus, and for PlayStation Morpheus. We’ve had the hardware for longer. We also know the Oculus is shipping with the pad and not Touch, and we don’t even know what the Morpheus is shipping with. We have to assume in terms of utility that we need to make it work well on the gamepad.”

Although support isn’t in the current build, nDreams doesn’t rule out HTC’s Lighthouse-tracked controllers or Oculus Touch.

nDreams has targeted all major VR headsets for The Assembly’s release, including HTC Vive, Oculus Rift, and PlayStation Morpheus.

For an updated gameplay close-up with commentary by Senior Designer Jackie Tetley, take a look at the video below.

The post Hands On: ‘The Assembly’ Offers New ‘Comfort Mode’, a Multi-Pronged Attack on Sim-Sickness appeared first on Road to VR.

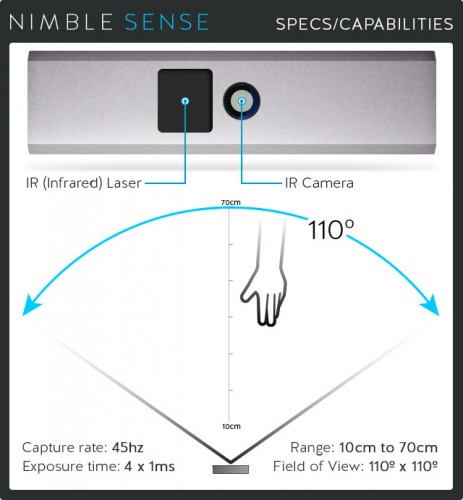

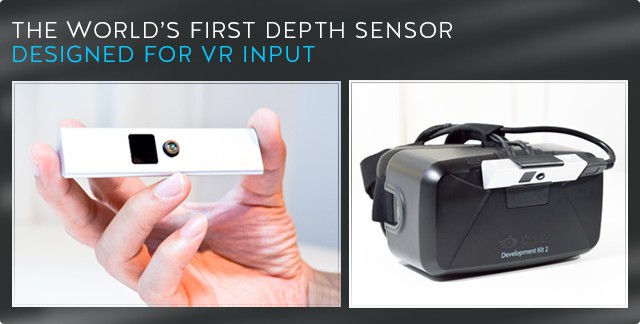

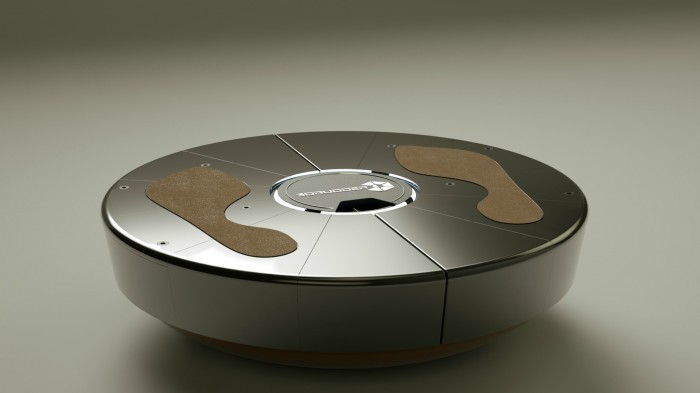

Our SVVR Conference and Expo 2014 coverage continues with a LiveStream of “User Input and Locomotion in VR”, a panel discussion that explores the challenges and possible solutions involved in letting users of virtual reality interact with these new and exciting spaces.

Linda Lobato is the CEO and co-founder of